Select your AI SDK:

- TypeScript + Vercel AI
- Python + OpenAI

Install Restate Server & CLI

Restate is a single self-contained binary with no external dependencies. Install it with one of the following options:

- Homebrew
- Download binaries
- npm
- Docker

Then start the server. After starting the Restate Server, you can find the Restate UI running on port 9070 (http://localhost:9070).

Get the AI Agent template

Run the AI Agent service

Export your OpenAI API key (as the OPENAI_API_KEY environment variable) and run the agent. The weather agent then listens on port 9080.

Register the service

Tell Restate where the service is running (http://localhost:9080), so Restate can discover and register the services and handlers behind this endpoint.

You can do this via the UI (http://localhost:9070) or via the Restate CLI: `restate deployments register http://localhost:9080`

If you run Restate with Docker, register http://host.docker.internal:9080 instead of http://localhost:9080.
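If you prefer to script the registration, the Restate admin API (port 9070) accepts a `POST /deployments` request whose JSON body carries the service URI. The sketch below assumes that endpoint and a runtime with a global `fetch` (for example Node.js 18+); the helper names `serviceUri`, `buildRegistration` and `registerDeployment` are hypothetical, not part of any Restate SDK. It also encodes the Docker rule above: when Restate runs in a container, `localhost` refers to the container, so the service is addressed via `host.docker.internal`.

```typescript
// Hypothetical helpers for registering a service deployment with the
// Restate admin API (assumed: POST <admin>/deployments with {"uri": ...}).
interface RegistrationRequest {
  url: string;
  body: { uri: string };
}

// Inside a Docker container, "localhost" is the container itself, so a
// service running on the host must be addressed via host.docker.internal.
function serviceUri(port: number, restateInDocker: boolean): string {
  const host = restateInDocker ? "host.docker.internal" : "localhost";
  return `http://${host}:${port}`;
}

function buildRegistration(adminUrl: string, uri: string): RegistrationRequest {
  // Strip trailing slashes so we never produce ".../9070//deployments".
  const base = adminUrl.replace(/\/+$/, "");
  return { url: `${base}/deployments`, body: { uri } };
}

async function registerDeployment(req: RegistrationRequest): Promise<void> {
  const res = await fetch(req.url, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(req.body),
  });
  if (!res.ok) {
    throw new Error(`Registration failed: ${res.status} ${await res.text()}`);
  }
}
```

Usage: `await registerDeployment(buildRegistration("http://localhost:9070", serviceUri(9080, false)));`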

Restate Cloud

When using Restate Cloud, your service must be accessible over the public internet so Restate can invoke it.

If you want to develop with a local service, you can expose it using our tunnel feature.

Send weather requests to the AI Agent

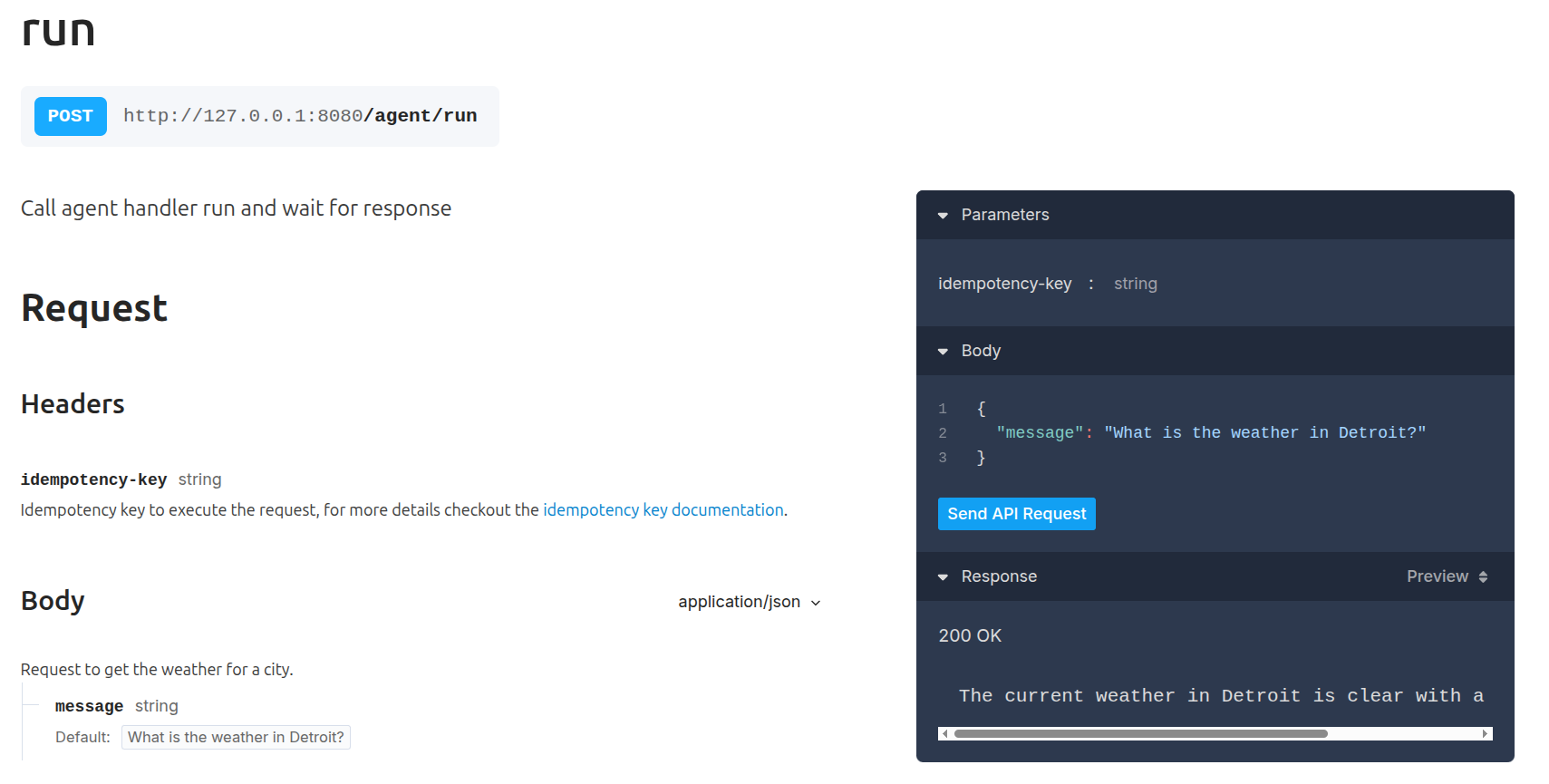

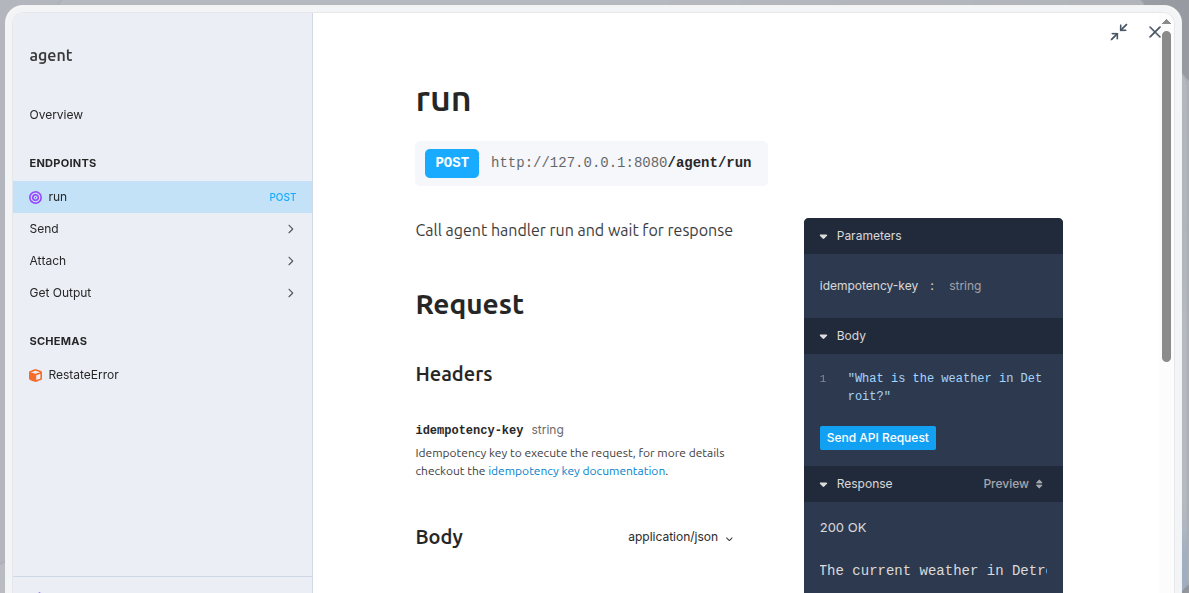

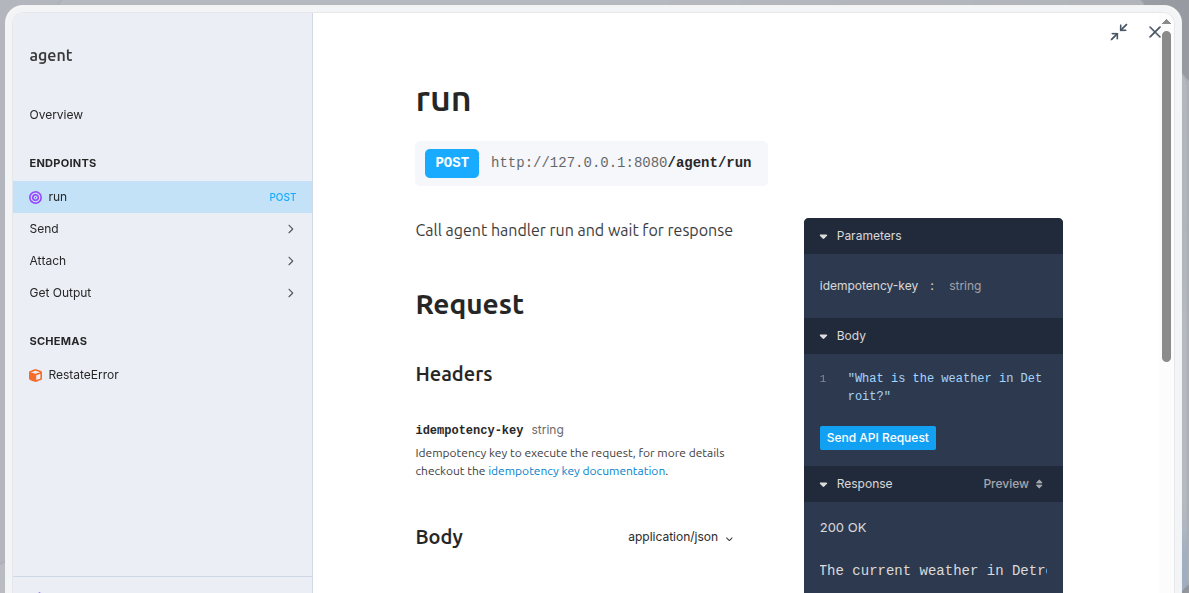

Invoke the agent via the Restate UI playground: go to http://localhost:9070, click on your service, and then click on Playground.

Or invoke it via curl.

Output:

The weather in Detroit is currently 17°C with misty conditions.

Congratulations, you just ran a Durable AI Agent!
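The curl request goes to Restate's ingress (port 8080 by default), which exposes each handler at `/<service>/<handler>`. The sketch below makes the same call from TypeScript. The service name `agent`, handler name `run`, and the JSON-string request body are placeholders, not the template's confirmed names; check the Restate UI for the actual service and handler of your template. `ingressUrl` and `invokeAgent` are hypothetical helpers, and a global `fetch` (Node.js 18+) is assumed.

```typescript
// Build the ingress URL for a handler: <ingress>/<service>/<handler>.
function ingressUrl(ingress: string, service: string, handler: string): string {
  const base = ingress.replace(/\/+$/, "");
  return `${base}/${encodeURIComponent(service)}/${encodeURIComponent(handler)}`;
}

// Hypothetical invocation: "agent"/"run" and the JSON-string body are
// placeholders; adjust them to the service and handler your template defines.
async function invokeAgent(prompt: string): Promise<string> {
  const res = await fetch(ingressUrl("http://localhost:8080", "agent", "run"), {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(prompt),
  });
  if (!res.ok) throw new Error(`Invocation failed: ${res.status}`);
  return res.text();
}
```

Because the agent is durable, this HTTP call is just the entry point: Restate, not the client, drives the LLM and tool steps to completion.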

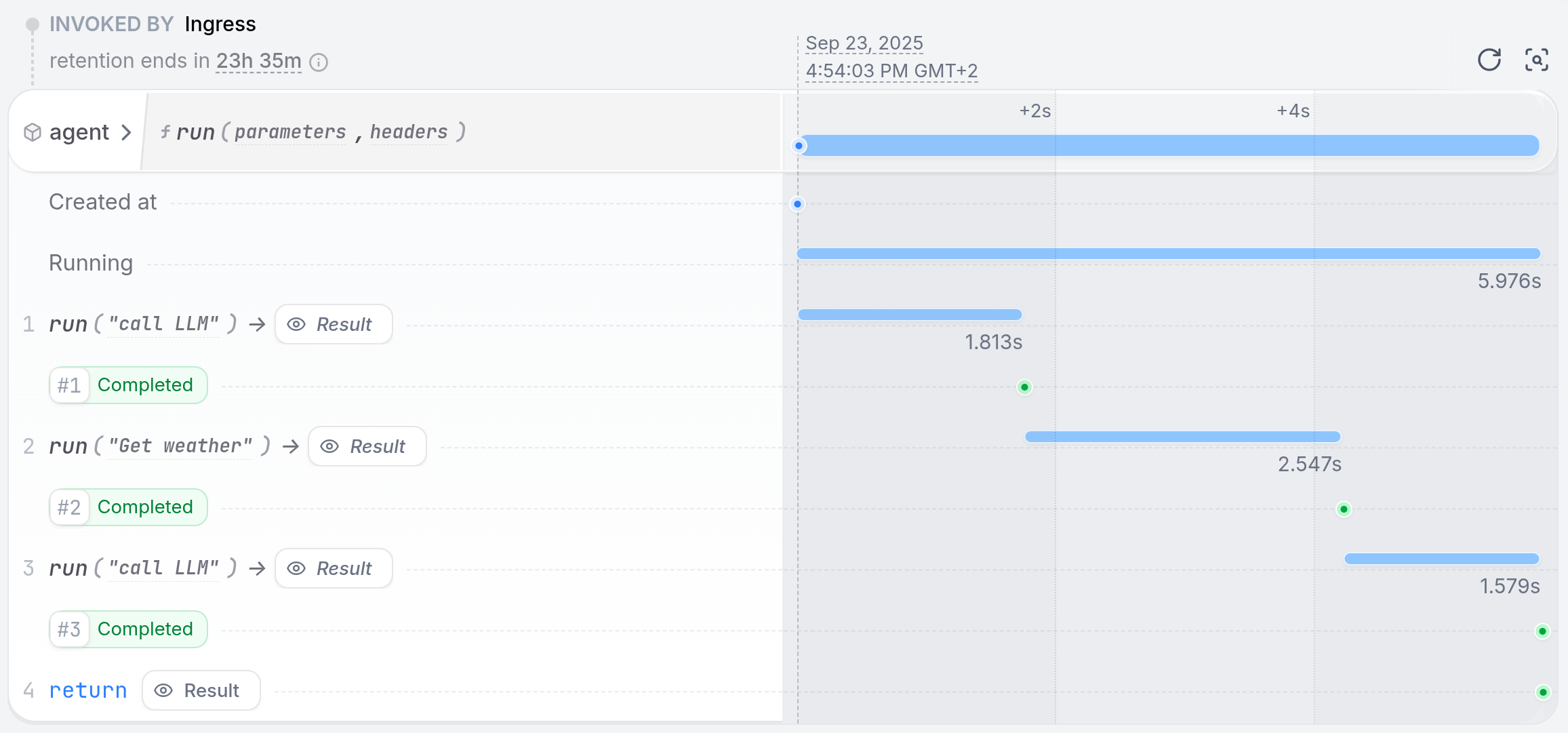

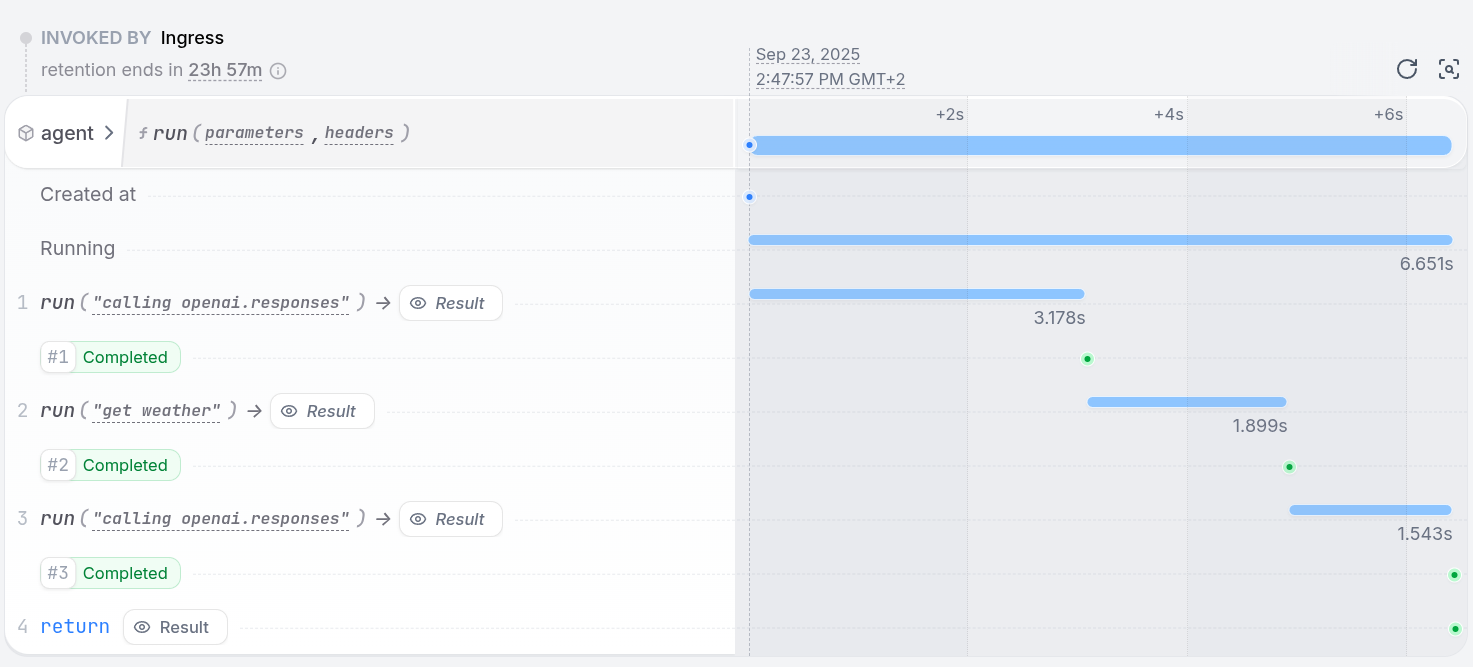

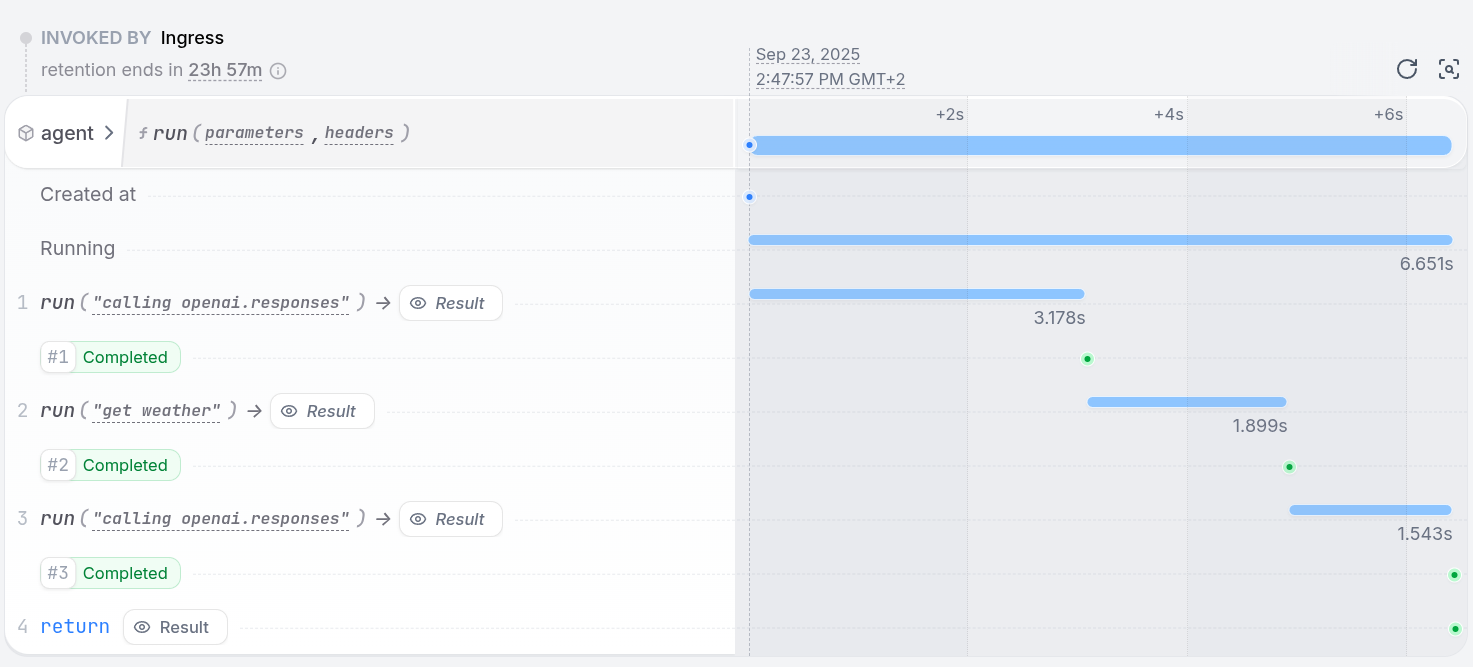

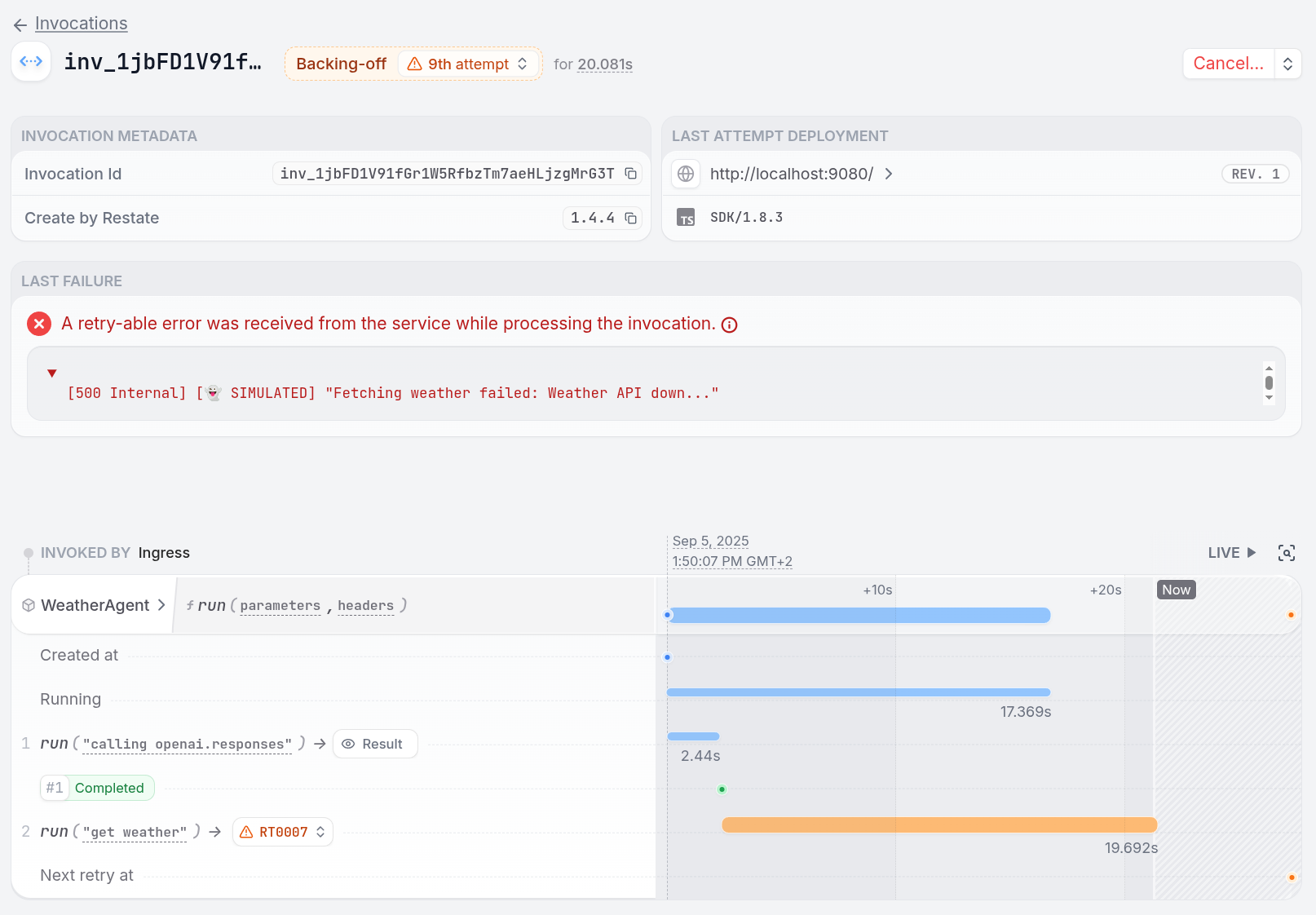

The agent you just invoked uses Durable Execution to make it resilient to failures. Restate persisted every LLM call and tool execution step, so if anything fails, the agent can resume exactly where it left off. We did this by using Restate's durableCalls middleware to persist LLM responses, and Restate Context actions (e.g. ctx.run) to make the tool executions resilient. The Invocations tab of the Restate UI shows how Restate captured each LLM call and tool step in a journal.

Next step:

Follow the Tour of Agents to learn how to build agents with Restate and the Vercel AI SDK, the OpenAI Agents SDK, and more.
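The journaling behavior described in the Durable Execution paragraph can be illustrated with a toy, in-memory model. This is not Restate's implementation or API: `ToyJournalContext`, `JournalEntry` and `toyWeatherAgent` are hypothetical, the steps are synchronous for simplicity, and the "LLM call" and "weather tool" are stubs. Each completed step is appended to a journal that outlives a single execution attempt; on re-execution, steps with a journal entry return the recorded result instead of running again.

```typescript
interface JournalEntry {
  name: string;
  result: unknown;
}

// Toy durable context: the journal array stands in for Restate's persisted journal.
class ToyJournalContext {
  private cursor = 0;
  constructor(private readonly journal: JournalEntry[]) {}

  run<T>(name: string, action: () => T): T {
    const index = this.cursor++;
    const entry = this.journal[index];
    if (entry !== undefined) {
      // Replay: this step completed in an earlier attempt, so skip the side effect.
      if (entry.name !== name) {
        throw new Error(`Non-deterministic replay: expected ${entry.name}, got ${name}`);
      }
      return entry.result as T;
    }
    const result = action(); // first execution of this step; may throw
    this.journal.push({ name, result }); // record only successful results
    return result;
  }
}

// Hypothetical agent: one stubbed "LLM call" followed by one stubbed "tool call".
// `sideEffects` records which actions actually executed.
function toyWeatherAgent(
  ctx: ToyJournalContext,
  sideEffects: string[],
  weatherApiDown: boolean,
): string {
  const city = ctx.run("llm-call", () => {
    sideEffects.push("llm-call");
    return "Detroit"; // stand-in for the model choosing the tool argument
  });
  return ctx.run("get-weather", () => {
    sideEffects.push("get-weather");
    if (weatherApiDown) throw new Error("weather API is down");
    return `17°C and misty in ${city}`;
  });
}
```

Running `toyWeatherAgent` with `weatherApiDown = true` throws after journaling only the LLM step. Running it again with a fresh context over the same journal replays the LLM result without a second model call and executes only the weather tool.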

See how a failing tool call is retried

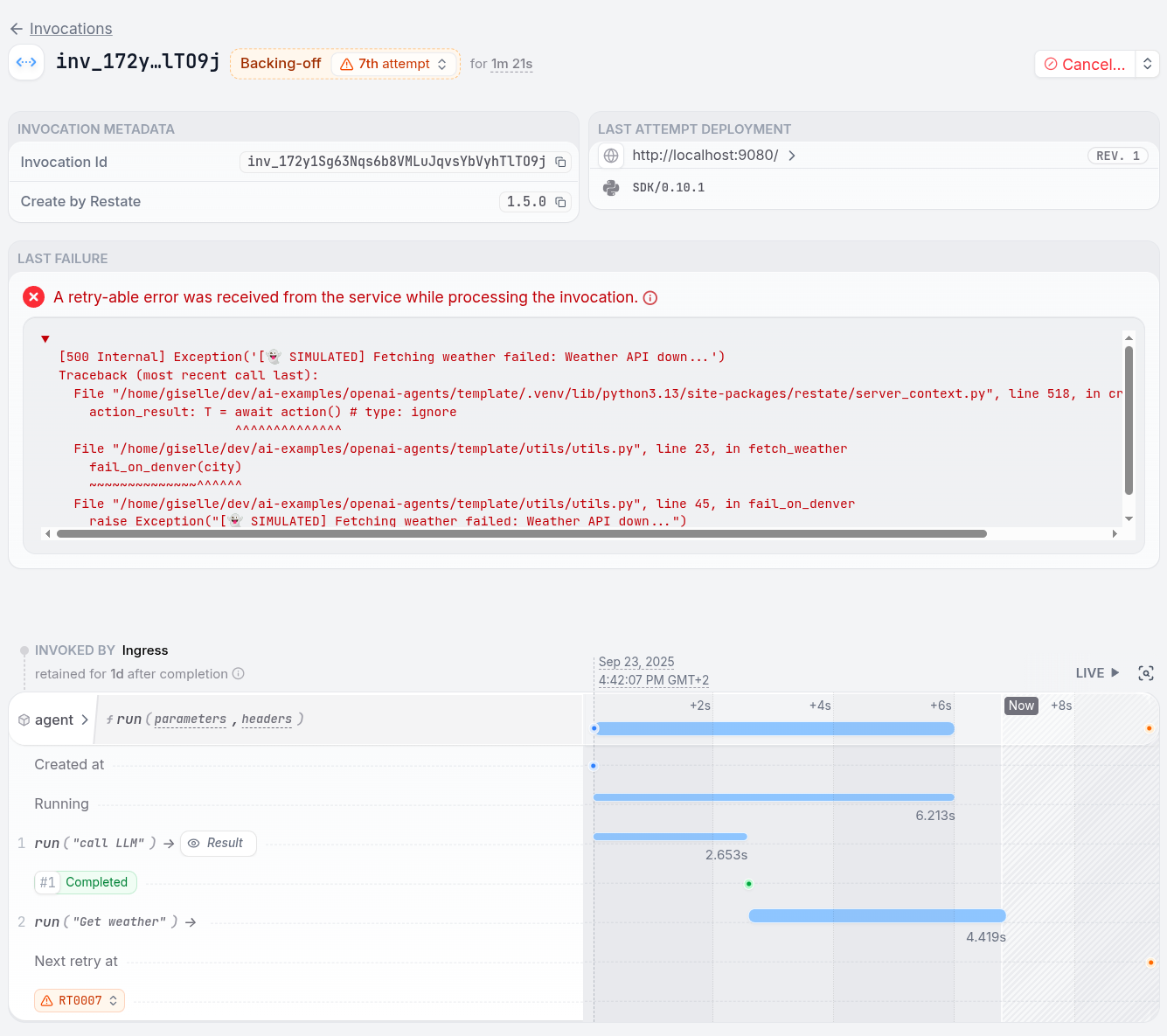

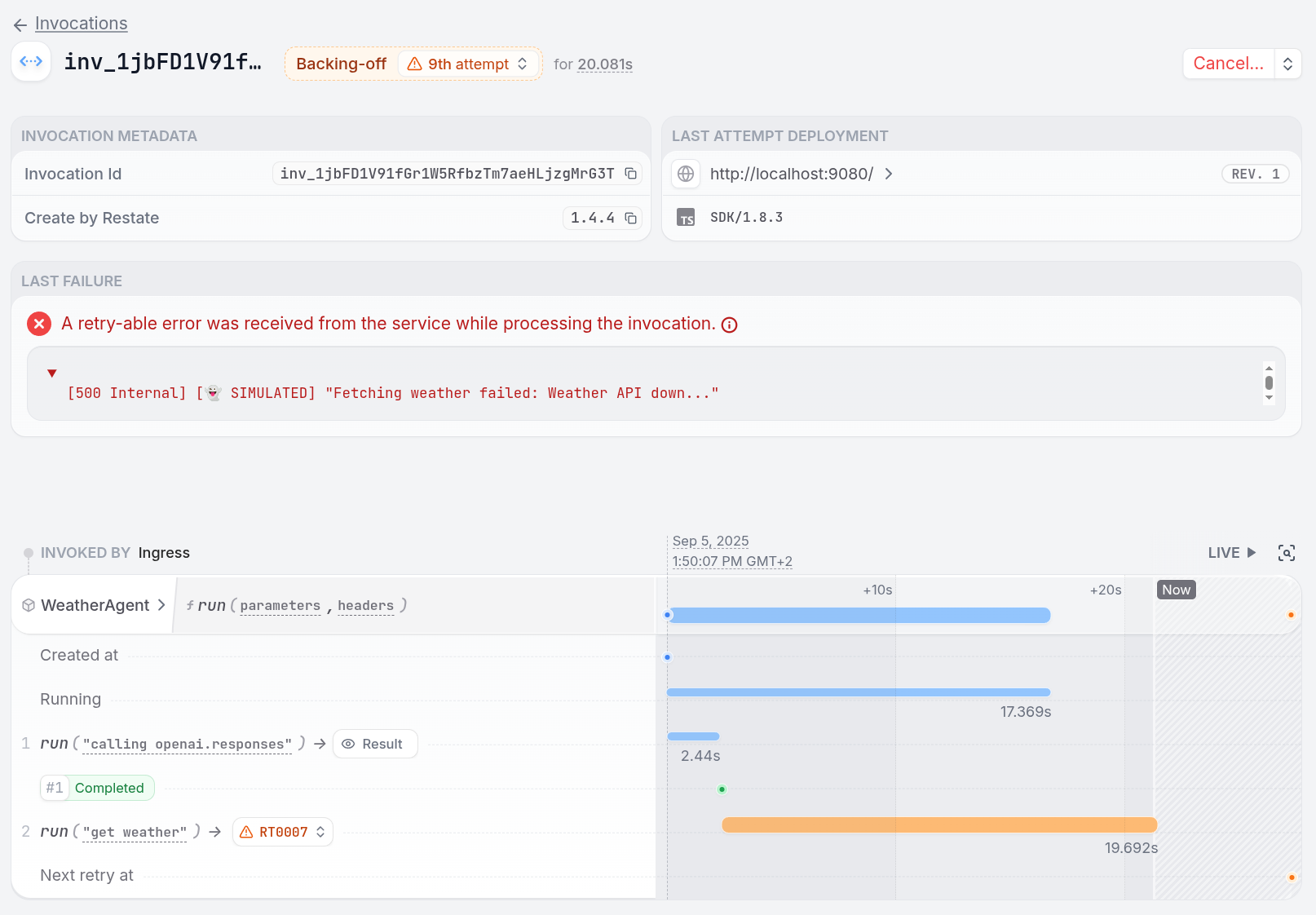

Ask about the weather in Denver. You can see in the service logs and in the Restate UI how each LLM call and tool step is durably executed.

You can see that the weather tool is currently stuck because the weather API is down. This was a simulated failure. To fix the problem, remove the failOnDenver line from the fetchWeather function in the utils.ts file. Once you restart the service, the agent resumes at the weather tool call and successfully completes the request.
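While the weather API is down, the failing tool step keeps being retried. The toy sketch below illustrates retrying a failing step with capped exponential backoff. It is not how Restate schedules retries: unlike this in-memory loop, Restate's retries continue across service restarts, as the Denver example shows. `retryUntilSuccess`, `backoffDelay`, `RetryOptions` and the attempt cap are hypothetical, chosen so the loop terminates in a test.

```typescript
interface RetryOptions {
  initialDelayMs: number;
  factor: number;
  maxDelayMs: number;
  maxAttempts: number; // toy cap so the loop always terminates
}

// Delay before the next attempt after failed attempt `attempt` (1-based), capped.
function backoffDelay(attempt: number, opts: RetryOptions): number {
  return Math.min(opts.initialDelayMs * opts.factor ** (attempt - 1), opts.maxDelayMs);
}

// Re-run `step` until it succeeds or the attempt cap is reached.
async function retryUntilSuccess<T>(step: () => Promise<T>, opts: RetryOptions): Promise<T> {
  for (let attempt = 1; ; attempt++) {
    try {
      return await step();
    } catch (err) {
      if (attempt >= opts.maxAttempts) throw err; // give up: surface the last error
      await new Promise((resolve) => setTimeout(resolve, backoffDelay(attempt, opts)));
    }
  }
}
```

With `initialDelayMs: 100`, `factor: 2` and `maxDelayMs: 1000`, the waits grow 100, 200, 400, 800 ms and then stay at 1000 ms. Backing off avoids hammering an API that is already down, while the cap keeps the wait between attempts bounded.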